Welcome again to AR Week here on Pocket-lint. If you haven’t already had your mind blown as to the future that augmented reality can bring us, then you’ve got a little catch-up reading to do but, don’t worry, there’s a wealth of articles from us yet to come.

For the moment, though, consumer AR is somewhat stuck at the level of holding your phone out in front of you to get some guidance on where your nearest train station is - something that could arguably be done on a standard map. So, what is it that’s going to take us from A to B? How are we going to get to that instant, seamless, information-fuelled future? Well, here are some of the key devices that will shoot augmented reality up to the next level. Oh, and if you’re still not sure what AR is all about, then that link should bring you nicely up to speed and without too many long words either.

AR glasses

The next big step for augmented reality is to get right up in our faces, and at all times too. There are a number of innate issues with having to use your mobile phone as the AR window on the world. Holding the thing at arm’s length in front of you is tiring, it looks a little silly, it rather invites having your phone taken off you and there’s an excellent chance of swinging round and accidentally punching someone in the face. There are also quite a few AR tasks out there, such as performing maintenance and repairs, that are going to need you to have your hands free. All of these are what many might class as “barriers” to AR getting much further.

Possibly worse than all of those is the fact that the view you get through your smartphone camera isn’t a proper 1:1 picture of what’s going on. Unlike in all the nice adverts for Layar and Wikitude, your display doesn’t fit in precisely with the world around you, with just a neat area framed off but otherwise in sync with the scale that you see; and that’s an issue when you’re trying to navigate and get the real to line up satisfactorily with the virtual as you move the phone about. All of these issues can be fixed by doing all the display work through a pair of AR glasses instead.

Fortunately, there are plenty of companies working on such a solution as we speak. Vuzix are the ones that most will have heard of and probably the only manufacturer out there at the moment offering a version of this kind of hardware for anything like consumer prices. The Vuzix Wrap 920AR glasses capture the world through twin cameras on the front of the lenses which broadcast a live signal of the world around you onto mini screens right in front of your eyes, which are the equivalent size of a 67-inch TV as seen from 3m away and with VGA resolution.

The idea is that you plug them in to your computer and they can make whatever virtual model or object you’re looking at appear on top of a real world surface such as your desk, and because they work with a different screen in front of each eye, it’s easy enough to make the object 3D as well. The trouble with them is that they’re not mobile enough, they’re still rather chunky and unsightly and they also happen to cost around £1,499. That said, the head tracking is solid and they definitely work.

A step up from this is the AR Walker system, as shown off in October 2010 at CEATEC in Japan. The system, designed in partnership by Olympus and mobile operator NTT Docomo, allows the user to see the real world without running the feed through a camera and video display. It’s a genuine view of the real world through a pair of normal glasses weighing just 20g. The only difference is that mounted to one of the lenses is a tiny projector which projects the virtual, computer-generated information directly to the peripheral vision of one of the user’s eyes. Look to the sides and the software will show you what shops are around you. Look up and you’ll get the weather. Look straight ahead and the application will revert to navigation mode and show you which way you need to travel.

The glasses and projector are connected to a smartphone which does all of the computational and graphics work. It also provides the GPS location while adjusting the images according to the head tracking inputs received through the glasses to detect the motion of the user. It still has to rely on the rather rudimentary mobile phone based geo-techniques, without using any actual live recognition of the real world around you, but it’s a decent step forward and an interesting demonstration nonetheless.
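To make the look-here-get-that behaviour a little more concrete, here is a minimal sketch of how head tracking inputs might be mapped to a display mode. It is purely illustrative and not how the Olympus and NTT Docomo software is known to work: the function name `select_mode`, the angle conventions and both threshold values are assumptions made up for the example.

```python
def select_mode(yaw_deg: float, pitch_deg: float,
                side_threshold: float = 30.0,
                up_threshold: float = 20.0) -> str:
    """Pick a display mode from head orientation (hypothetical thresholds).

    yaw_deg:   0 means facing the direction of travel; negative is left,
               positive is right.
    pitch_deg: 0 means level; positive means looking up.
    """
    # Looking up takes priority: show the weather.
    if pitch_deg >= up_threshold:
        return "weather"
    # Looking far enough to either side: show nearby shops.
    if abs(yaw_deg) >= side_threshold:
        return "nearby_shops"
    # Otherwise the wearer is facing ahead: fall back to navigation.
    return "navigation"


for yaw, pitch in [(0, 0), (45, 0), (-50, 5), (10, 35)]:
    print(yaw, pitch, select_mode(yaw, pitch))
```

In a real system the yaw and pitch would come from the sensors in the glasses, and some smoothing would probably be needed to stop the display flickering between modes when the head hovers near a threshold.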

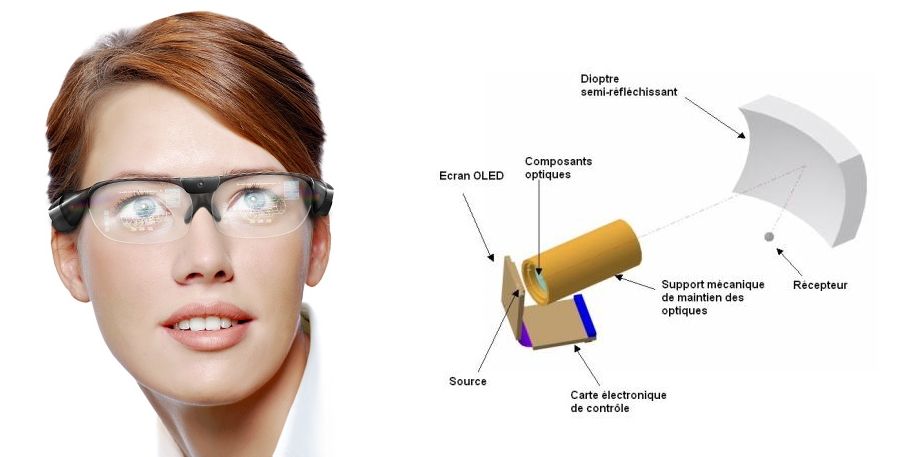

French company Laster, in partnership with an assortment of research centres and optical institutes across the country and other parts of Europe, has a product already working in the professional world with a system that can recognise its surroundings. The patented Enhanced View technology works by having a pair of see-through glasses with a tiny camera placed on the bridge. It sends the camera’s visual information wirelessly back to whatever device is running the application - mobile phone, PDA, laptop, games console, portable DVD player - which can in turn project the correct graphics, lined up properly on your visual field, courtesy of two pico projectors in the arms of the glasses. These display the virtual images at up to 800x600 resolution onto the backs of the semi-reflective lenses. The whole image is effectively the size of an 88-inch screen as seen from 3 metres and it can overlay text, images and video.

Speaking to Laster, the company expects to be launching a version of the glasses into the consumer market in March 2011 with a product priced between £600 and £800. The hope is to have something with a complete field of view, with better weight and ergonomics, and at an affordable price point in two to three years’ time. Exciting and ambitious stuff.
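Those "screen size at 3 metres" figures are easier to compare as angles. As a rough sketch, assuming a flat screen viewed head-on and treating the quoted inches as the diagonal, the arithmetic works out at roughly 32 degrees across the diagonal for the Vuzix figure of 67 inches and roughly 41 degrees for Laster’s 88 inches. The function name `apparent_diagonal_deg` is our own invention for the example.

```python
import math

INCH_IN_METRES = 0.0254


def apparent_diagonal_deg(diagonal_inches: float, viewing_distance_m: float) -> float:
    """Angle (in degrees) that a screen's diagonal takes up when viewed head-on."""
    half_diagonal_m = diagonal_inches * INCH_IN_METRES / 2
    # Two right-angled triangles back to back: half the diagonal over the distance.
    return math.degrees(2 * math.atan(half_diagonal_m / viewing_distance_m))


vuzix = apparent_diagonal_deg(67, 3.0)
laster = apparent_diagonal_deg(88, 3.0)
print(f"67-inch at 3m: {vuzix:.1f} degrees")
print(f"88-inch at 3m: {laster:.1f} degrees")
```

Either way, it is a window in the middle of your vision rather than something that fills it.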

AR contact lens

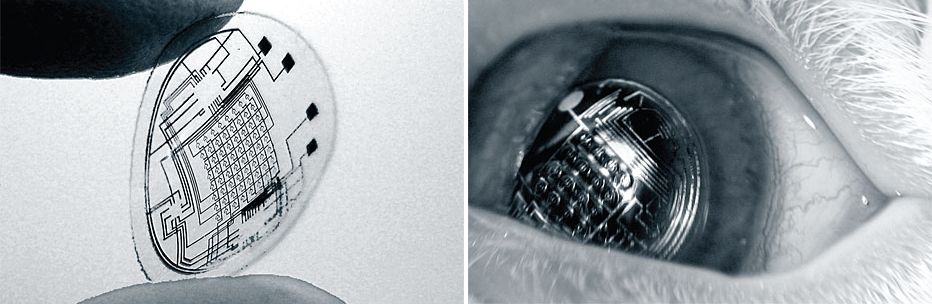

The next step from the AR glasses has to be AR contact lenses and, as fantastical as it sounds, there is a team at the University of Washington who have a prototype already. Led by Professor Babak Parviz, the group has managed to augment a standard polymer-based contact lens with ultra-thin metal circuitry consisting of components at around one thousandth of the thickness of a human hair, and it didn’t seem to bother the rabbits who wore them for the 20-minute trials.

The lens houses basic wiring as well as a single red LED and an antenna ready for wireless communication with whatever device is set to be running the AR application. What’s quite astounding are some of the challenges the team overcame, including the problem of how to get the metal components to adhere correctly to a polymer surface - materials that are notoriously tricky to match together. Parviz and co have come up with a system of micro-fabrication which means that the components self-assemble, once in the eye, by floating into specific receptor sites on the surface of the lens via a system of capillary action - the same as plants use to draw up water from soil.

Sadly, the problems don’t end here. The lens in the test was not actually switched on because there are some pretty huge issues which lie ahead. First of all, there’s one of safety. If people have problems with the radiation from even a mobile phone’s antenna when held at the side of your head or sitting in your pocket, then how about the dangers when one is put right onto the thin surface of your eye? What’s more, a working AR lens is going to heat up and if it gets much hotter than 44 degrees centigrade, that’s going to start burning the surface of the cornea. Not very nice.

The bigger challenges are the ones of functionality. The human eye is used to resolving a sharp image at a comfortable minimal distance of around 25cm. So, how do you produce a picture right on the surface of the eye? The suggested approach is to adjust the angle of the incoming light from the LEDs so that the image focuses through the human lens behind the cornea and directly onto the retina. Of course, that’s all very well in theory but it’s going to take human testing to get that one right. A rabbit isn’t going to tell them when the LEDs look sharp.

Powering the lens is also an area the Washington team has to work on. It looks like the best method is going to be harvesting ambient radio frequencies, although using the heat and movement of the human eye, as well as wireless power, could perhaps help out.

Finally, there are the problems for the user of being able to see through all the gubbins to the real world, but Parviz is confident that there is enough space around the periphery of the contact lens to house the circuitry so as not to get in the way of what the eye is trying to see. It’s also going to be important from the point of view of others as well. At the moment, it’s very clear to see that someone is wearing a bionic lens; and for this kind of thing to take off, the public needs to accept it which is going to be about it not looking too weird. All quite a challenge, so don’t expect to get fitted for an AR contact lens for a good 30 years or more.

SixthSense

Far less invasive than something like a contact lens, and more achievable in a shorter time scale than AR glasses, is something like the interactive projector system invented by Pranav Mistry, a PhD student at MIT. Called SixthSense, the setup consists of an apparatus worn rather like a medallion that connects to an application on a mobile phone which takes care of all the computational and graphical tasks.

A camera facing forward at the top of the array takes in information of what’s in front of the user and most of the time is concerned specifically with an area as projected by a pico projector also hung around the user’s neck. The user can interact with the projection which is displayed on whatever surface is in front of them with a number of gestures as dictated by the thumb and forefinger of both hands. These digits are capped by coloured markers which the SixthSense camera can recognise and pass back to the mobile phone for the application to then perform whatever task has been demanded.
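For a flavour of how coloured markers can be picked out of a camera image, here is a minimal sketch. It is not Mistry’s actual code, which we haven’t seen; it simply finds the centre of all pixels close to a given marker colour and treats two markers coming together as a pinch. The names `marker_centroid` and `is_pinch`, the tolerance and the gap value are all assumptions for illustration, and the "frame" is a tiny hand-made grid of RGB values rather than a real camera feed.

```python
import math


def marker_centroid(frame, target_rgb, tolerance=60):
    """Return the (x, y) centre of pixels near target_rgb, or None if none match."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            # A pixel matches if every colour channel is within the tolerance.
            if (abs(r - target_rgb[0]) <= tolerance
                    and abs(g - target_rgb[1]) <= tolerance
                    and abs(b - target_rgb[2]) <= tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def is_pinch(thumb, finger, max_gap=4.0):
    """Treat two markers closer than max_gap pixels as a pinch gesture."""
    if thumb is None or finger is None:
        return False
    return math.dist(thumb, finger) <= max_gap


GREY, RED, BLUE = (128, 128, 128), (255, 0, 0), (0, 0, 255)

# A 10x10 grey frame with a red marker (thumb) and a blue marker (forefinger).
frame = [[GREY] * 10 for _ in range(10)]
frame[4][2] = frame[4][3] = RED
frame[4][5] = frame[4][6] = BLUE

thumb = marker_centroid(frame, RED)
finger = marker_centroid(frame, BLUE)
print(thumb, finger, is_pinch(thumb, finger))
```

A real version would have to cope with changing light and skin tones, and would more likely work in a colour space that separates hue from brightness, but the principle of "find the coloured blobs, then watch how they move relative to each other" is the same.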

The best way of getting an idea of what it’s all about and precisely what kinds of tasks it can help with is by watching the video demonstration below. It’s 8 minutes long but well worth it, and if you haven’t seen it before, we do guarantee a small thunk as your jaw hits your desk.

Whether it's for photos, looking up information on products or for social interaction, there’s definitely a very near future for this kind of technology, and, even though it’s the largest and most obvious of all the AR devices so far, it’s very easy to see how it sits more easily into our social expectations. We already adorn ourselves with necklaces and it’s not a large step to see how the SixthSense can be housed in a more fashionable item or reduced to something small enough to be sewn into a garment. What’s more, one could imagine four brightly coloured fingernails actually looking stylish, cool and very much the future normal.

Perhaps the only drawbacks are the projector itself and the power of the recognition software. Pico projectors currently tend not to be that bright and are always going to be limited in the size of display they can throw out and the range of distance at which they can do it. Out in bright sunlight, one might have a few issues. Naturally, these things are still relatively new and doubtless the picos will come on in leaps and bounds if needed.

Smartphones

Yes, we have these already and a very good thing too. It’s all very well doing AR parlour tricks with your computer and webcam but if we’re going to get this burgeoning technology really going, we need mobile computers to run those applications. Fortunately, smartphones are just the ticket and with every year they become more and more perfect for the job.

With the arrival of Qualcomm’s Snapdragon SoC solution, and now the likes of the dual-core Tegra 2 setups, mobile phones are more than capable of any computational and graphical tasks that augmented reality on the go requires. Throw in 3G coverage becoming much better, along with the development of 4G solutions, and the connectivity to get all the information required to run these tasks from the Internet is also in place.

Where these devices are lacking at the moment is in tracking, geo-location, recognition, in the camera itself - as mentioned earlier - and the age-old problem of battery life. There are better gyroscopes and accelerometers and more expensive, surveyor-grade GPS software out there, but these kinds of things currently would push the price of such a device up into the tens of thousands of pounds. Fortunately, the likes of Qualcomm and Nokia are working on their chips to make a better job of what resources already lie inside our smartphones, and recognition software is on its way to providing real-time virtual/actual match-ups.

There’s still plenty of development to go but the point is that for the time being, and into the foreseeable future, it appears to be these devices, or their grandchildren, that will supply our AR power wherever we go.

For more information on what Qualcomm is doing with Augmented Reality please click: http://www.qualcomm.co.uk/products/augmented-reality