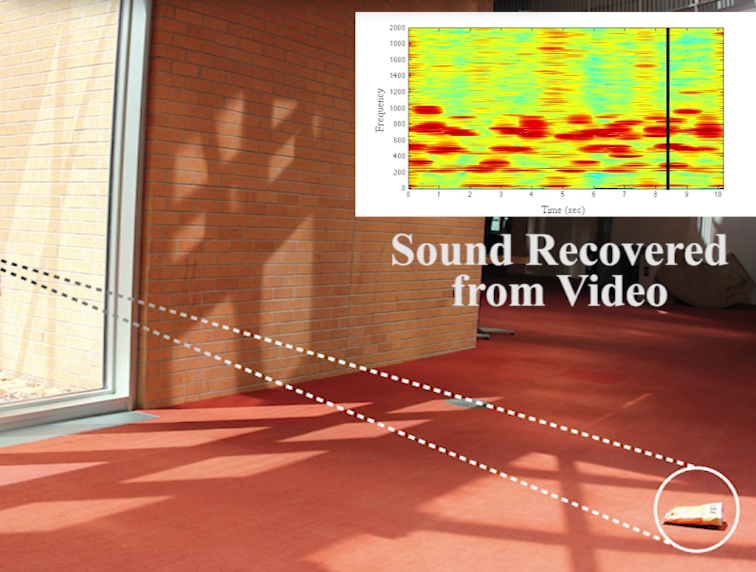

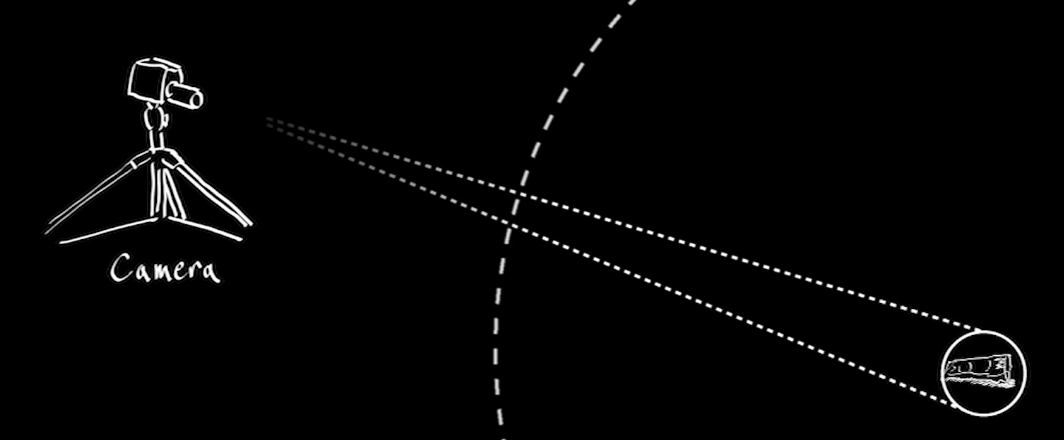

Researchers from MIT, Microsoft, and Adobe have developed a way to extract audio from the vibrations of objects captured on video. In one experiment, they reconstructed speech from the vibrations of a potato chip bag that was filmed from 15 feet away, through soundproof glass.

According to The Washington Post, the researchers have not only identified the tiny vibrations that objects make when sound hits them, but have also created an algorithm that can reconstruct sound, and even intelligible speech, from those vibrations as recorded on video. Their experiments include recovering audio from aluminium foil, a glass of water, and a plant. They will present their findings in a paper at Siggraph 2014.

“When sound hits an object, it causes the object to vibrate,” said Abe Davis, a graduate student in electrical engineering and computer science at MIT and first author on the new paper. “The motion of this vibration creates a very subtle visual signal that’s usually invisible to the naked eye. People didn’t realize that this information was there.”

A high-speed camera is usually required to capture these tiny vibrations. The researchers used, for instance, a camera recording 2,000 to 6,000 frames per second, along with a rolling shutter, which let them record and then analyse vibrations that happen too quickly for the naked eye to see. Although the reconstructed sound still isn't crystal clear, the technology has a wide range of potential applications.
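Why such high frame rates matter can be illustrated with the Nyquist criterion: a signal sampled N times per second can only represent frequencies up to N/2 Hz. The minimal Python sketch below applies that rule to the frame rates mentioned above. It assumes each video frame acts as one sample of the vibration and ignores rolling-shutter effects; it is a back-of-the-envelope illustration, not the researchers' method.

```python
def nyquist_limit_hz(frames_per_second: float) -> float:
    """Highest frequency (Hz) that frame-by-frame sampling can represent without aliasing.

    Simplifying assumption: one vibration sample per video frame (rolling shutter ignored).
    """
    return frames_per_second / 2.0


# Frame rates cited in the article for the high-speed camera.
for fps in (2000, 6000):
    print(f"{fps} fps -> up to {nyquist_limit_hz(fps):,.0f} Hz")
```

Under this assumption, a 6,000 fps recording can represent frequencies up to 3,000 Hz, which is close to the roughly 3.4 kHz upper limit of traditional telephone audio.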

https://www.washingtonpost.com/posttv/c/watch?v=098665de-1c0c-11e4-9b6c-12e30cbe86a3

“This is a new dimension to how you can image objects,” said Davis. “It tells you something about how they respond physically to pressure, but instead of poking and prodding at them, all you need is to play sound at them.” Davis also noted that the technology could serve as another tool for forensic applications.