Google has shown off the next version of Google Assistant at Google I/O 2019, demonstrating what we can expect from the new experience.

One of the challenges Google faced was the sheer quantity of data needed to interpret voice commands. Because Assistant has to reference that data online, there is typically a lag as it interprets the language and then executes the action.

Introducing the demo, Sundar Pichai said that language interpretation typically needed 100GB of data, but that Google had managed to shrink this to 0.5GB, small enough to be housed on the device rather than in the cloud.

As a result, Google will be able to offer an Assistant experience with much lower latency. It also moves Assistant beyond hotword responses to continuous conversation: you can string together a range of actions seamlessly, without delay, and without saying "Ok Google" every time.

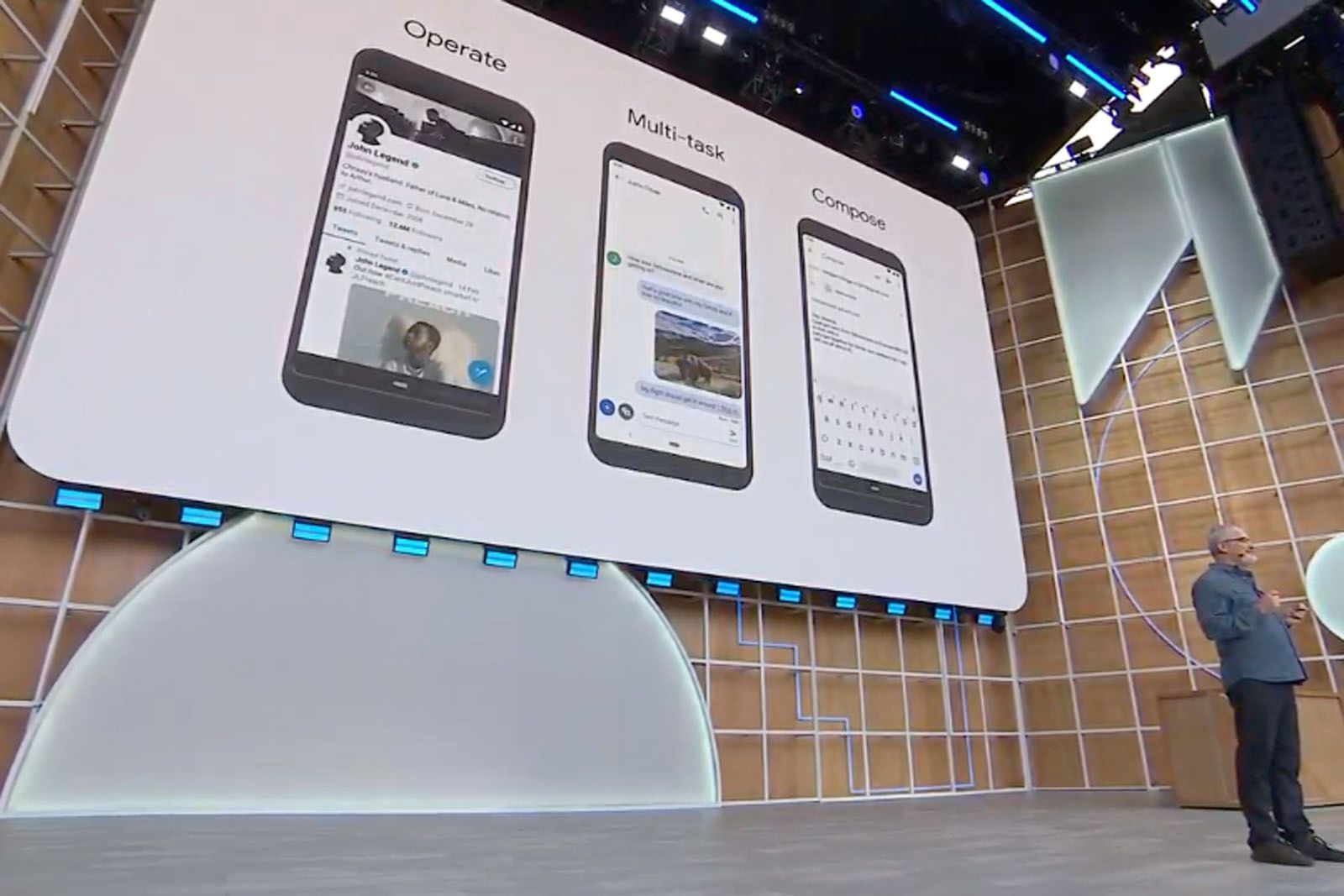

The demonstration showed a series of voice-controlled actions, opening a range of apps and retrieving information, all happening instantly as part of one conversation.

The best part is the integration across apps: you could, for example, use your voice to reply to a message, send it, open your photos, search them, pick a new image and send it to the person you're messaging.

It's an impressive evolution of a system that has been smart but a little clunky in the past. Fortunately, this next-gen version of Google Assistant isn't too far off: it will be coming to Pixel devices later this year.

We're betting that will coincide with the launch of the Google Pixel 4.

Google Assistant is also getting a driving mode, which will come to any phone with Google Assistant, again later this year.