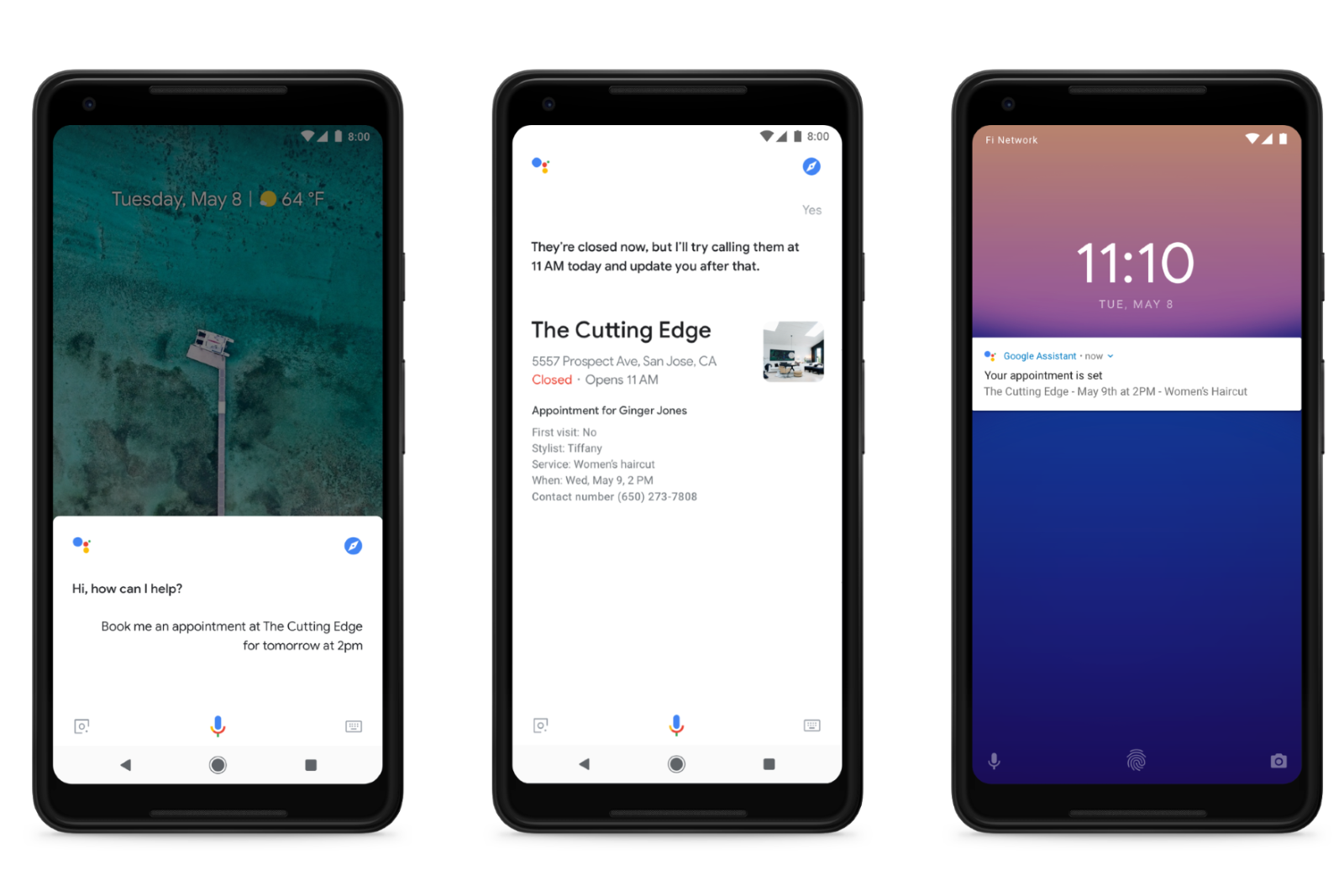

Google Duplex is the name for a set of technologies that sit inside Google Assistant and carry out tasks on your behalf. The whole idea is to save you time. It can call businesses for you to make reservations, book appointments, check hours of operation and more. It makes these calls with a human-sounding voice that you can hear in the videos below.

There’s no learning curve and no extra step to set up Duplex. Once it is enabled, you can ask the Google Assistant on your phone something like, "Hey Google, call [name of restaurant] and make a reservation for four people on 22 October at 7pm." From that point, Google Assistant places the call, and Google Duplex talks to whoever answers the phone at the restaurant. What's more, it all happens in the background.

Duplex will also add your reservations and appointments to your calendar. “Allowing people to interact with technology as naturally as they interact with each other has been a long-standing promise,” said Google head of engineering Yossi Matias at the time of the original announcement of Duplex in 2018.

“The Google Duplex technology is built to sound natural, to make the conversation experience comfortable. We hope that these technology advances will ultimately contribute to a meaningful improvement in people’s experience in day-to-day interactions with computers.”

In 2020, during Google's Search On event, the company shared an update on how Duplex and Google Assistant are helping people, from providing more accurate business information in products like Google Maps, to booking appointments and reservations, to waiting on hold.

Google said that Duplex conversational technology is now calling businesses to automatically update business listings on Search and Maps with modified store hours and details like takeout or no-contact delivery.

"We began using Duplex to automatically update business information and add it to Search and Maps at scale in the US [in 2019]. That means business owners don’t have to worry about manually updating these details, and potential customers get access to the most accurate information," said Yossi Matias, head of engineering at Google, in a blog post.

"We still have a way to go towards having truly natural-feeling conversations with machines, so it’s exciting to see the great progress across the industry in neural speech recognition and synthesis and in our own new language understanding models.

"For Duplex, these and many other advancements translate into significant improvements in quality. In fact, 99 percent of calls made by Duplex today are entirely automated."

Where Duplex has rolled out so far

The rollout of Google's Duplex tech is still limited to the US. Although Google promised to take "a slow and measured approach" with the feature, it's now available on devices other than Google Pixel phones.

In March 2019, Google announced that it would be available in 44 states, after an initial beta period that had included only four US cities. Presumably, bringing it to other countries, such as the UK, depends on a lot of localisation work.

Duplex for the web

Duplex for the Web was announced at Google I/O 2019. With this, Duplex goes beyond speech and can now fill out information and forms for you across several pages. Often when you book things online, you have to navigate a number of pages, pinching and zooming to fill out all the forms.

With Duplex for the web, Assistant will essentially act on your behalf, filling out forms according to your usual preferences. Google demonstrated how Duplex can book a car (see the video below). As Google's Sundar Pichai put it, filling out forms is "time-consuming and if you lose users in the workflow then businesses lose out as well. Our system can do it better".

Should AI really pretend to be human?

Beyond the wish to create a better experience with Artificial Intelligence (AI), Duplex raises some concerns. Firstly, such a capability within Google Assistant could make us pretty lazy. Secondly, there is significant concern about the potential for Duplex to mislead those who are being called on your behalf.

It’s perfectly possible that Duplex might admit to the person being called that it is actually a computer calling them. Does it matter if the interaction is as natural as with a human?

That’s open to debate, though it was clear that Google has tried to make the experience as natural as possible, going a bit overboard with filler language such as “er” or “um” in the sample calls.

Humans use such speech disfluencies to build in thinking time, and that’s also the case here: they disguise the fact that the system is still thinking. Google adds that while we expect some things to be answered instantly, such as when we first say hello on a phone call, it’s actually more natural to have pauses elsewhere.

“It’s important to us that users and businesses have a good experience with this service,” continues Matias. “Transparency is a key part of that. We want to be clear about the intent of the call so businesses understand the context.”

Here's the Google Duplex demo in action during the original 2018 Google I/O keynote talk:

Can we trust AI yet?

Another problem with Duplex is that our experiences with virtual assistants and other voice control systems have led us to mistrust them. Or, at least, not trust them completely.

There’s the obvious concern that you might not get the result you wanted from a virtual assistant you’d tasked to book a table for you. Would it be at the right time and even in the right restaurant? Where the system detects that it hasn’t been able to get the desired result, Google’s idea is that it will be honest and flag this to you.

While there’s no rational reason these details should be wrong, the temptation as a human is to doubt that a virtual assistant would be able to get everything right. Would it really be able to interpret the nuances of language that accurately?

Google argued on stage and again in the supporting Google Duplex blog post that the idea behind the system is to carry out very specific tasks such as scheduling a hair appointment or booking a table. Unless trained to, it can’t suddenly call your doctor and start to have a chat.

This stuff is actually pretty complicated

Natural language is hard to understand, while the speed of conversation requires some pretty fast cloud computing power. People are used to having complex interactions with other humans that, says Matias, can be “more verbose than necessary, or omit words and rely on context instead. [Natural human conversations] also express a wide range of intents, sometimes in the same sentence.”

Google says that other challenges for the technology are the background noise and poor call quality that are a hallmark of many phone calls. People also tend to speak more quickly when talking to another human than they would if they thought they were giving voice commands to a computer.

Context is all-important, too, of course, and we tend to make contextual connections that computers traditionally don’t. So during a restaurant booking, the human might say a number which could mean the time or it could mean the number of people.
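To make the ambiguity concrete, here is a minimal Python sketch of how a bare number could be read differently depending on what was asked just before it. This is purely illustrative and is not how Duplex works internally: the function name `interpret_number`, the keyword checks and the labels it returns are all assumptions made for this example.

```python
from typing import Optional


def interpret_number(value: int, last_question: Optional[str]) -> str:
    """Guess what a bare number refers to, using the previous question as context.

    Hypothetical helper for illustration only; a real system would use a
    trained language-understanding model rather than keyword matching.
    """
    if last_question is None:
        return "unknown"
    question = last_question.lower()
    if "how many" in question or "people" in question:
        return "party_size"
    if "what time" in question or "when" in question:
        return "time"
    return "unknown"


# The same reply, "four", means different things after different questions.
print(interpret_number(4, "How many people?"))
print(interpret_number(4, "What time would you like?"))
```

The point of the sketch is simply that the number alone is not enough: the meaning comes from the turn that preceded it.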

Google says it is combatting these challenges with the use of a recurrent neural network that’s ideal for a series of inputs, as you would get during a phone conversation. The system still uses Google’s own Automatic Speech Recognition (ASR) technology and layers on the nuances of that particular conversation: what’s the aim of the conversation? What’s been said previously?
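For readers curious why a recurrent network suits a series of inputs, the toy Python sketch below shows the core idea: a hidden state is carried from one conversational turn to the next, so the result depends on what came before. It is a generic textbook-style recurrent step, not Google's model; the weights, the two-number "turn" vectors and the function names are invented for illustration.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One simple recurrent step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h_next = []
    for i in range(len(h_prev)):
        total = b[i]
        total += sum(w_x[i][j] * x[j] for j in range(len(x)))
        total += sum(w_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_next.append(math.tanh(total))
    return h_next


def run_sequence(inputs, w_x, w_h, b):
    """Feed a sequence of inputs through the network, carrying the state along."""
    h = [0.0] * len(b)  # start with an empty "memory"
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
    return h


# Toy weights and inputs (hypothetical values chosen only for this example).
W_X = [[0.5, -0.3], [0.1, 0.8]]
W_H = [[0.2, 0.0], [-0.4, 0.6]]
B = [0.0, 0.1]
turn_a = [1.0, 0.0]
turn_b = [0.0, 1.0]

state_ab = run_sequence([turn_a, turn_b], W_X, W_H, B)
state_ba = run_sequence([turn_b, turn_a], W_X, W_H, B)

# The same two turns in a different order leave the network in a different state.
print(state_ab != state_ba)
```

Because the final state changes with the order of the turns, the network has a built-in way to reflect what has been said previously, which is the property the paragraph above describes.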

What are the benefits of Duplex?

There are several benefits to the Duplex technology, argues Google (beyond helping out busy people). Firstly, it could benefit businesses that don’t have online booking systems, as users can still book appointments online. Users will also get reminders about the appointment from Assistant, leading to fewer missed appointments.

Secondly, it can make specific local data online more accurate, as Google is already demonstrating by using Duplex to call stores and ask them for opening times.

Google says that current human-computer voice interactions don’t engage in a conversation flow and force the caller to adjust to the system instead of the system adjusting to the caller.

And, of course, it could help those who have difficulty using the phone because of a disability.
