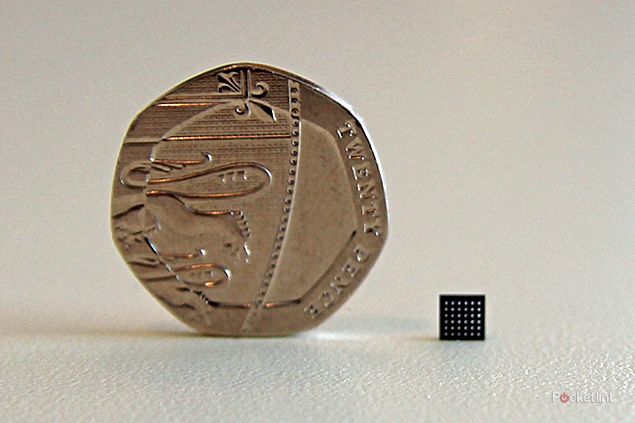

Audience has pulled the covers off the earSmart eS325 chip, which the company claims will make your voice calls and audio capture sound much better than before.

You probably haven't heard of Audience. Founded in 2000, Audience supplies the hardware chips that handle voice and audio processing in mobile devices.

Since the company started shipping its processors in 2008, more than 250 million have found their way into devices. They currently sit in smartphones such as the Samsung Galaxy S III and Motorola RAZR i, as well as the Nexus 10 tablet.

As our devices become smarter, voice and audio capture is becoming more important: video calling, dictation, voice recognition and the soundtrack to your spectacularly sharp 1080p video all rely on, or could benefit from, audio processing.

Audience's chip is a hardware processor that sits between the mic and the rest of the device, so before your audio reaches an application, the processor has had a chance to work its magic. We sat down with Audience to see exactly what this hardware does.

We're all familiar with noise suppression or noise cancellation, but it's impressive what this sort of chip can achieve. We went through a series of tests with different background distractions to see how well the Audience earSmart chip could clean things up. Suffice it to say, it's hugely effective at dealing with things like music or café noise, picking out the speaker's voice and delivering a clear, natural-sounding conversation to the person on the other end.

With the launch of the earSmart eS325, Audience is claiming a huge leap in effectiveness too. It uses the company's third-gen voice algorithm to pick out what should be heard and what shouldn't.

This matters more as wideband audio, or HD Voice, becomes more widespread, because capturing a wider frequency band also pulls in more unwanted background noise.

One of the interesting aspects, as Robert Schoenfield, VP of marketing at Audience, explained, is that devices are tuned differently in different territories. In the US, networks like AT&T specify that devices should let through almost no background noise. In Europe, however, it's commonplace to allow a little background noise in, and these preferences are set at the device level.

Typically, the user has no control over the noise suppression, although Schoenfield pointed out that in some devices like the Samsung Galaxy S III, there's the option to turn off noise cancellation.

On the topic of voice recognition, the Audience chip will also improve the audio sent to services such as Google Voice. Because it processes audio before it reaches the baseband processor (for voice calls) or the application processor (for data services like Google Voice), you'll benefit from cleaner audio across everything you do with your device.

The new earSmart eS325 improves on the older generation of hardware, adding three-mic support and de-reverb, so when you're in an empty room it can cut out the hollow sound that often comes across. The new hardware will also enable voice zoom, so you can conduct an interview in a noisy environment and the voices will be picked out and come across clearly.

All this talk is good, but when will devices with the new hardware appear?

"We’re not in position to pre-announce devices," said Schoenfield, "but we will be in devices in the next 60 days." The official line is that devices will arrive from the end of April 2013.

Considering that our demo involved the Samsung-built Nexus 10 and the Samsung Galaxy S III, and that the last-gen hardware is in the original Samsung Galaxy Note and the Note II, it's a pretty safe guess that the eS325 will be in the forthcoming Samsung Galaxy S IV, due to launch on 14 March 2013.