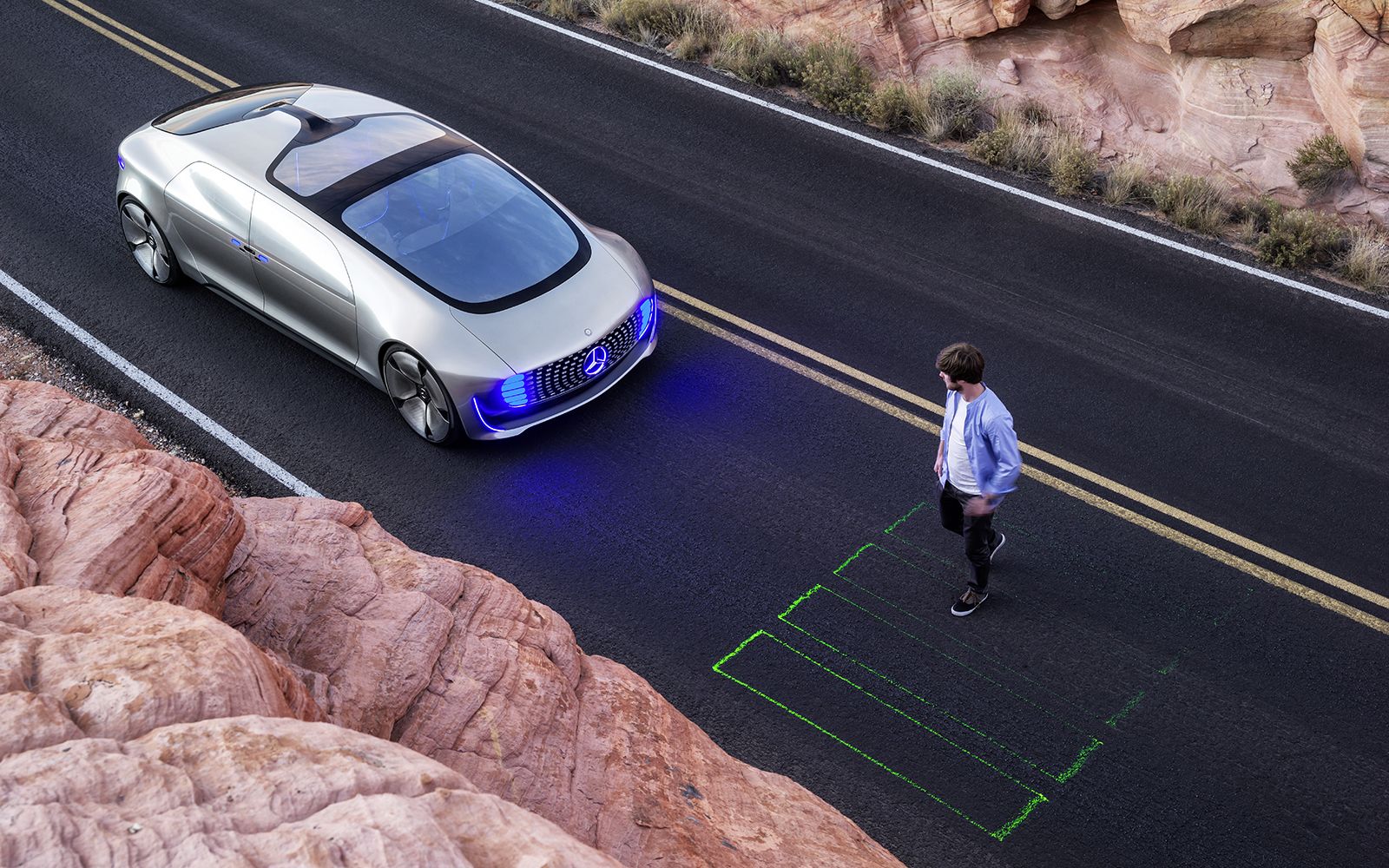

Self-driving cars are coming. Cars that can make millisecond decisions will hold our lives in their processors. Are we ready for that?

A classic philosophical problem posits: you're a train controller, and a tram full of children is about to crash. You can divert it onto another track, but your son, who came to work with you that day, is on that track. Do you save your son or a tram full of children?

As humans, we don't know what we'll do until we're in that situation, and we hope we will never have to choose. Autonomous cars, however, will have to be pre-programmed with that choice.

So will you be prepared to let a car drive you when it's programmed to veer off a mountain to your death, if it means dodging a mother and her baby?

The University of Alabama at Birmingham (UAB) has an Ethics and Bioethics team dealing with this type of issue right now.

They say that to make such a decision, a computer program would need to weigh every ethical side of it, but "a computer cannot be programmed to handle them all".

This was tested when St Thomas tried to give an answer in advance for all problems in medicine. "It failed miserably, both because many cases have unique circumstances and because medicine constantly changes," said Gregory Pence, Ph.D., chair of the UAB College of Arts and Sciences Department of Philosophy.

Perhaps the alternative is to let the driver program the choice before setting off. Or will it become a legal issue that the government, or even car manufacturers, decide?

The future offers a rocky road for autonomous vehicles. Here's hoping the cars will be smart enough to navigate that road safely.